
Implement an integration platform to drive business transformation

This article explores what an integration platform is, what problems it solves and how it can power real business transformation.

Contents

- What Is An Integration Platform?
  - A tool to connect applications and data sources across the organisation
  - A toolkit for implementing fault tolerant system integrations
  - A gateway to accessible, standardised, reusable data
  - A standardised approach to securing all accessible data assets
  - A data quality firewall to increase data quality
  - A matching engine
  - A transparent data transit tool backed by rich telemetry
- Why Does Your Company Need An Integration Platform to Drive Business Transformation?
  - Eliminate point to point integrations
  - Reduce data quality problems
  - Make data accessible across the organisation
  - Create business agility with re-usable APIs
  - Legacy applications not designed to support modern workloads
  - Process automation increases efficiency and reduces data errors
  - De-risk application procurement/retirement
- Summary
- What Next?

What Is An Integration Platform?

A tool to connect applications and data sources across the organisation

An integration platform connects data silos and securely exposes data in a consistent format, over consistent transport protocols, using consistent authentication mechanisms. This consistency makes digital projects more efficient to deliver, and replacing data silos with connected datasets empowers organisations to:

- Make data driven decisions

- Automate key business processes

- Increase data quality

A toolkit for implementing fault tolerant system integrations

Most organisations have an IT landscape that is constantly evolving. Because technology moves fast, it is quite normal to find very successful businesses whose heartbeat is an old IT system, alongside newer systems procured to support specific business operations or to modernise customer interactions in line with current industry norms.

As new systems are added to the IT landscape, some might be on-premise and others might be cloud-based SaaS products. Our systems can therefore extend beyond the perimeter of the corporate LAN, which means we must confront the first of the fallacies of distributed computing as defined by L. Peter Deutsch:

Fallacy #1 - the network is reliable.

Whether our internal networking equipment fails, a data centre succumbs to a DDoS attack or a DNS outage takes large parts of the internet offline, the end result is the same: our systems cannot communicate. There are many possible causes of such an outage and often they are outside our direct control.

The network is not the only likely point of failure. Imagine a company with an on-premise ERP system covered by an SLA, which means that all upgrades are performed at a time convenient for the company; to date, maintenance has been straightforward. Over time, more systems have been added to the company's IT landscape, especially cloud-based SaaS services, some of them hosted in different geographic regions. These SaaS products may have periodic releases to add features and fix bugs, but the customer often has much less control, if any, over the maintenance windows.

If a company strives to be data driven by connecting data from previously siloed systems, it should adopt an integration platform that assumes interruptions in connectivity between systems will occur, for whatever reason, and provides appropriate mechanisms to handle such integration faults, such as automated retries, alerts and remediation tools.

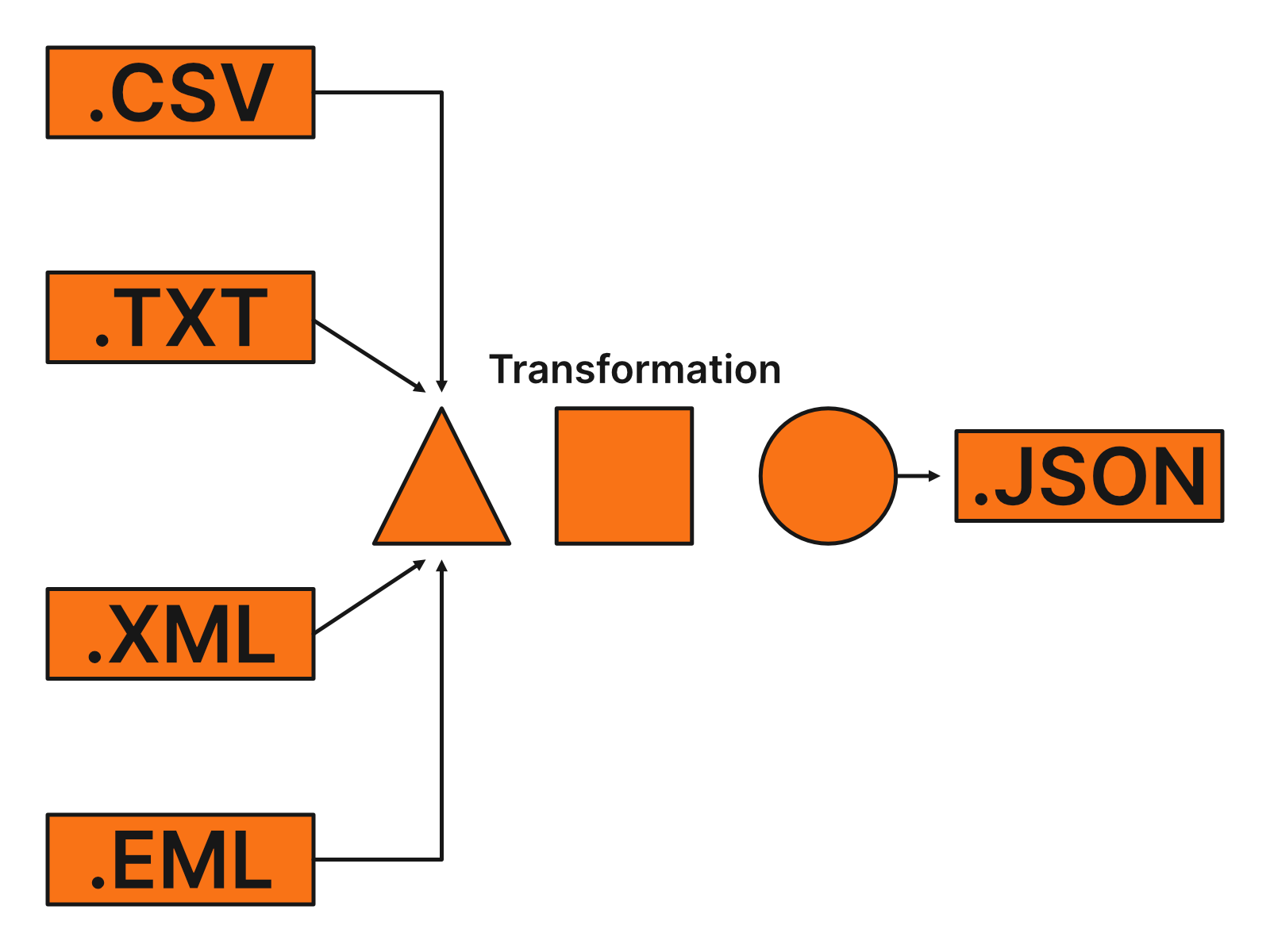
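As a minimal sketch of one such mechanism, the Python below wraps any call to another system in automated retries with exponential backoff and raises an alert once retries are exhausted. `TransientError`, `call_with_retries` and the default delays are illustrative assumptions rather than any product's API; the `sleep` parameter exists only so the waits can be skipped in tests.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Raised by a connector for failures worth retrying, e.g. a timeout."""


def call_with_retries(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    alert: Callable[[str], None] = print,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `operation`, retrying transient failures with exponential backoff.

    When every attempt fails, an alert is raised so that someone can remediate
    (for example by replaying the message later) and the last error is re-raised.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError as exc:
            if attempt == max_attempts:
                alert(f"giving up after {attempt} attempts: {exc}")
                raise
            # The delay cap doubles each time (0.5 s, 1 s, 2 s, ...) up to max_delay;
            # a random "full jitter" wait stops many clients retrying in lockstep.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable: the loop always returns or raises")


if __name__ == "__main__":
    calls = []

    def update_system_b() -> str:
        calls.append(1)
        if len(calls) < 3:  # simulate System B being unreachable twice
            raise TransientError("System B unreachable")
        return "synced"

    print(call_with_retries(update_system_b, sleep=lambda _: None))  # synced
```

Retrying only errors marked as transient is a deliberate choice: a validation failure will fail the same way every time, so it belongs in a remediation queue rather than a retry loop.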

A gateway to accessible, standardised, reusable data

Most organisations have valuable data stored in many different formats, and this variety can be a barrier to realising the full value of your data assets. Having multiple formats introduces several complications:

- Innovation is hindered as developers experience increased cognitive load remembering the parsing nuances of each format.

- Reporting efforts can be hindered if data is not stored in a structured format.

- Systems may not be able to work with data from other systems if it is not produced in a compatible format.

An integration platform makes it easy to transform and consume any piece of data in the format most appropriate to the task at hand.

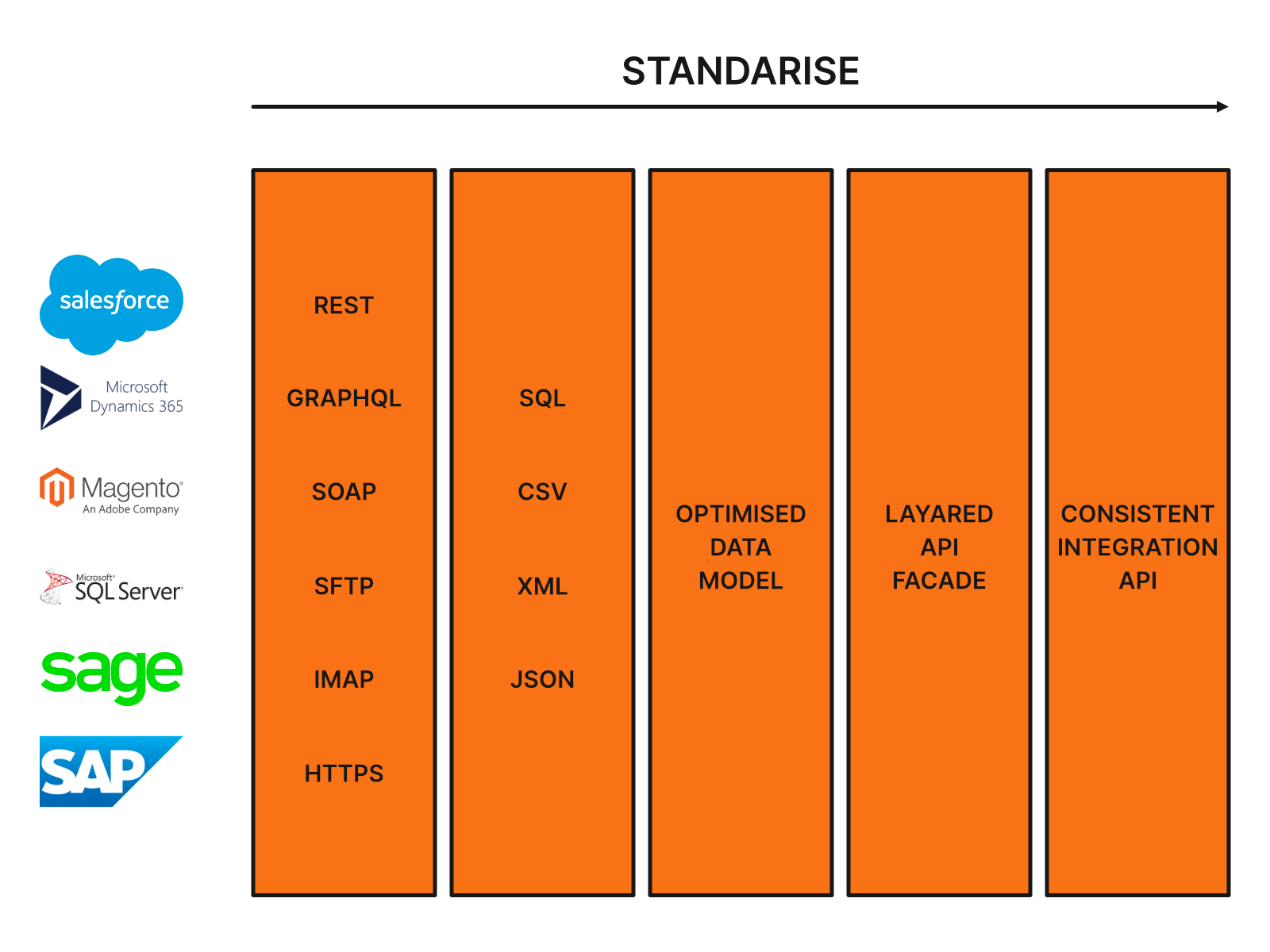
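To make that concrete, here is a small Python sketch that reads customer records from a CSV export and from an XML feed into one canonical shape and emits JSON. The column names, XML element names and the canonical `id`/`name` fields are hypothetical; a real platform would typically drive such mappings from configuration.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET


def from_csv(text: str) -> list[dict]:
    """Map CSV rows (with a header line) onto the canonical customer shape."""
    reader = csv.DictReader(io.StringIO(text))
    return [{"id": row["customer_id"], "name": row["full_name"].strip()} for row in reader]


def from_xml(text: str) -> list[dict]:
    """Map <customer id="..."><name>...</name></customer> elements onto the same shape."""
    root = ET.fromstring(text)
    return [
        {"id": el.get("id"), "name": (el.findtext("name") or "").strip()}
        for el in root.iter("customer")
    ]


def to_json(records: list[dict]) -> str:
    """Serialise canonical records for any consumer that prefers JSON."""
    return json.dumps(records, sort_keys=True)


if __name__ == "__main__":
    csv_text = "customer_id,full_name\n42,Ali Smith\n"
    xml_text = '<customers><customer id="43"><name>A Smith</name></customer></customers>'
    print(to_json(from_csv(csv_text) + from_xml(xml_text)))
    # [{"id": "42", "name": "Ali Smith"}, {"id": "43", "name": "A Smith"}]
```

Consumers only ever see the canonical shape, so adding a new source format means writing one more parser rather than changing every consumer. For untrusted XML, the Python documentation recommends a hardened parser such as `defusedxml`.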

It isn't only data formats that differ from system to system, but also the protocols and standards used to request and transport data, for example:

| Flat file | Web API | Message Broker |
|---|---|---|
| FTP | REST | AMQP |
| SFTP | SOAP | JMS |
| IMAP | GraphQL | |
| POP | | |
| HTTP | | |
| HTTPS | | |

Different IT systems dictate different protocols that must be used when interacting with them. For example, web APIs commonly used SOAP in the early 2000s and REST throughout most of the 2010s, and as we enter the 2020s GraphQL is gaining ground. When email is used to transport files as attachments, some servers use POP and others IMAP. A file server might accept FTP, but for security reasons it is more likely to require SFTP. If you have a message-based architecture, perhaps you use AMQP or JMS.

The point is that each of these protocols requires its own set of commands to send and receive data. An integration platform saves your technical team from dealing with the implementation details of every transport protocol and data format, leaving them free to focus on higher-value tasks.

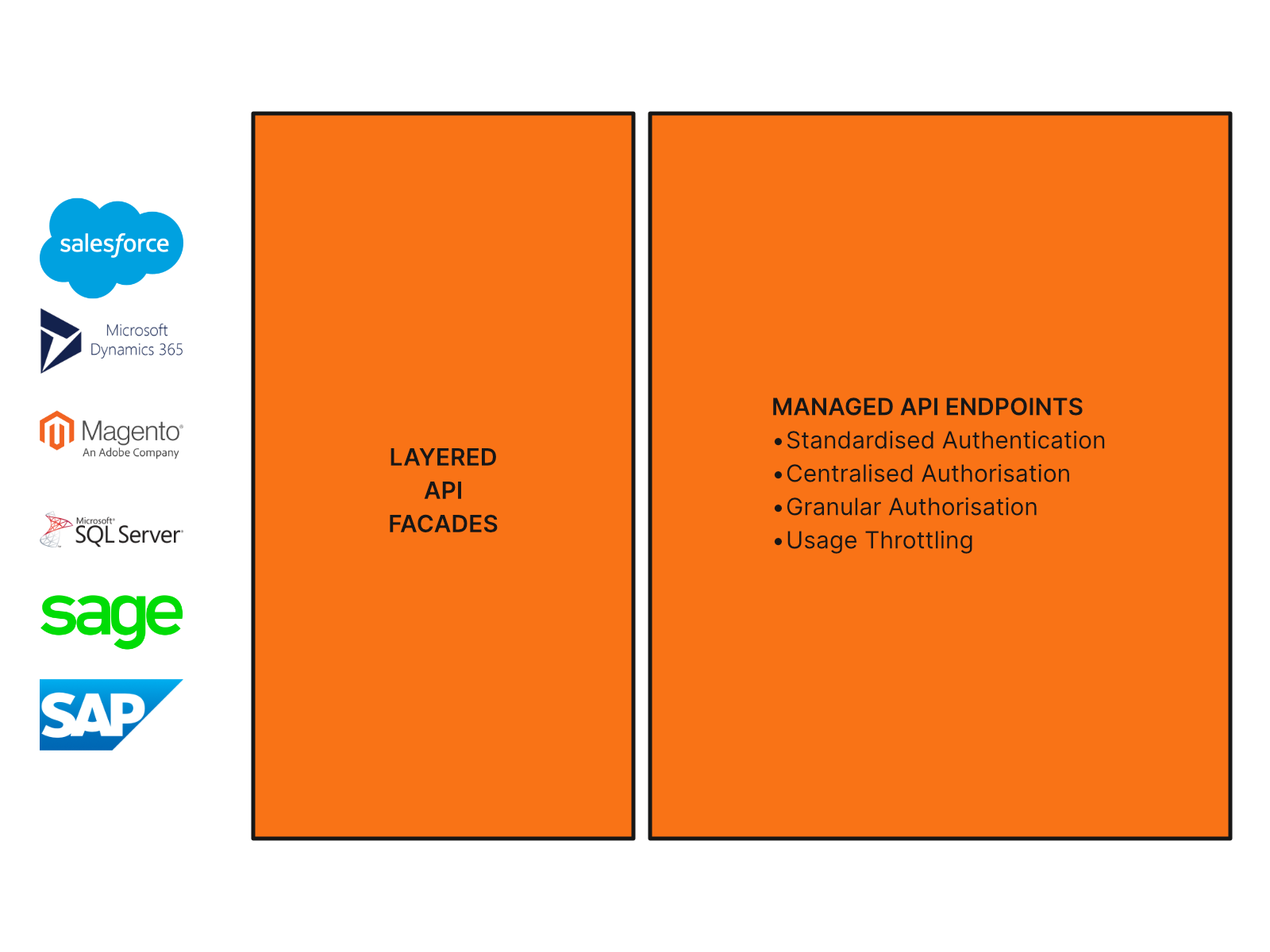
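One common way to achieve this is a connector abstraction: every transport is wrapped behind the same small interface, so integration flows never see protocol details. In the sketch below, which is an illustration under assumed names, a local folder stands in for an FTP/SFTP file drop and an in-memory queue stands in for an AMQP or JMS broker; real connectors would use the corresponding client libraries behind the same `receive`/`send` methods.

```python
import itertools
import tempfile
from abc import ABC, abstractmethod
from collections import deque
from pathlib import Path


class Connector(ABC):
    """The one interface the platform's flows use, whatever the transport."""

    @abstractmethod
    def receive(self) -> list[bytes]:
        """Return and consume all pending messages."""

    @abstractmethod
    def send(self, message: bytes) -> None:
        """Deliver one message to the endpoint."""


class FolderConnector(Connector):
    """Stand-in for a file-drop transport; an SFTP connector would fit the same shape."""

    def __init__(self, folder: Path):
        self.folder = folder
        self._seq = itertools.count()

    def receive(self) -> list[bytes]:
        messages = []
        for path in sorted(self.folder.glob("*.msg")):
            messages.append(path.read_bytes())
            path.unlink()  # consume each file once it has been read
        return messages

    def send(self, message: bytes) -> None:
        # Zero-padded sequence numbers keep files in send order when sorted.
        (self.folder / f"{next(self._seq):08d}.msg").write_bytes(message)


class QueueConnector(Connector):
    """Stand-in for a broker queue; an AMQP connector would fit the same shape."""

    def __init__(self):
        self._queue: deque[bytes] = deque()

    def receive(self) -> list[bytes]:
        messages = list(self._queue)
        self._queue.clear()
        return messages

    def send(self, message: bytes) -> None:
        self._queue.append(message)


def relay(source: Connector, target: Connector) -> int:
    """Move every pending message from source to target, whatever their protocols."""
    messages = source.receive()
    for message in messages:
        target.send(message)
    return len(messages)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        inbox, broker = FolderConnector(Path(tmp)), QueueConnector()
        inbox.send(b"order-1")
        inbox.send(b"order-2")
        print(relay(inbox, broker), broker.receive())  # 2 [b'order-1', b'order-2']
```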

A standardised approach to securing all accessible data assets

In a siloed IT landscape some things are more straightforward. One of them is controlling access to data, which most enterprise-grade systems can handle directly. For example, an ERP system could allow the merchandising team to see product data while only the finance team can see transaction data.

But what happens when we connect the ERP system, the CRM and the customer portal? Now who controls access to customer data? What if we make that combined dataset available internally for reporting purposes? Who controls the level of access that different users can be granted?

Thinking about data in terms of the individual systems it resides in will quickly become a barrier to gaining full value from that data. It also hinders innovation if our technical teams are forced to jump through different authentication hoops for each system they consume data from. Further, administering data access becomes complicated when we manage multiple users across multiple systems, each with different access rights.

A data governance framework provides the organisational structure to decide who should be able to access what data and an integration platform provides the technical tooling to facilitate it.
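As a rough illustration of the technical side, the sketch below applies a single, centrally defined policy to a combined customer record, returning only the fields a caller's roles allow and denying everything else by default. The role names, field names and `POLICY` table are hypothetical; in practice the caller's identity and roles would typically come from the platform's authentication layer, for example claims in an access token.

```python
from dataclasses import dataclass

# Hypothetical policy agreed through data governance: which roles may read
# which fields of the combined customer dataset.
POLICY: dict[str, set[str]] = {
    "customer_services": {"customer_id", "name", "email", "membership_status"},
    "finance": {"customer_id", "lifetime_spend"},
    "reporting": {"customer_id", "membership_status", "lifetime_spend"},
}


@dataclass(frozen=True)
class Caller:
    user: str
    roles: frozenset[str]


def authorised_view(caller: Caller, record: dict) -> dict:
    """Return only the fields the caller's roles permit; unknown roles grant nothing."""
    allowed = set().union(*(POLICY.get(role, set()) for role in caller.roles))
    return {field: value for field, value in record.items() if field in allowed}
```

Because every consumer goes through the same check, changing who can see what means editing one policy rather than reconfiguring each source system.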

Looking beyond user access concerns, a standardised approach to securing all of your data assets allows you to protect your mission critical systems from overload by easily applying usage throttling wherever needed.

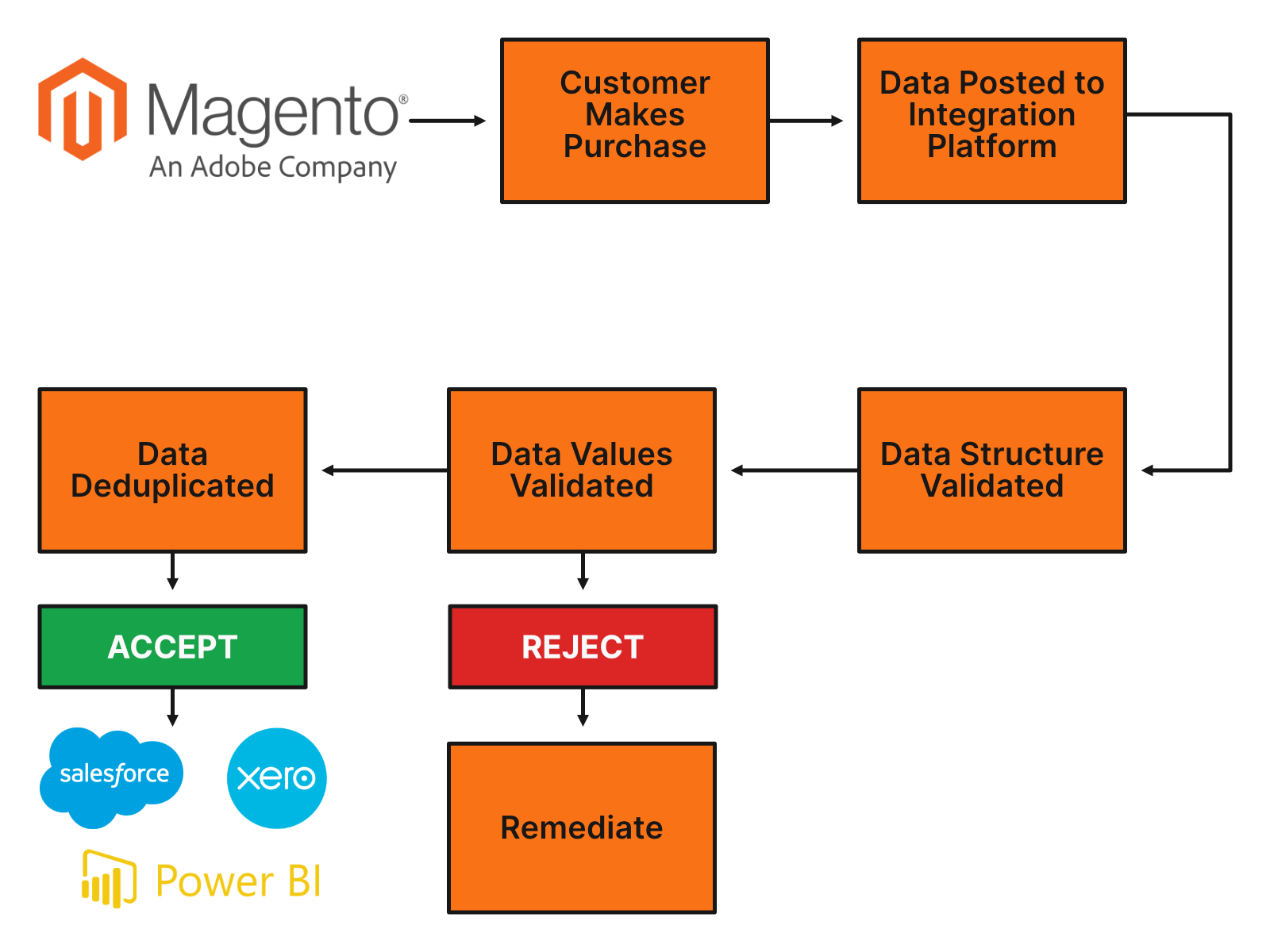
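Throttling is often implemented with a token bucket. The Python below is a minimal sketch, assuming a per-client bucket that allows short bursts of up to `capacity` requests and then refills at `rate` requests per second; callers that find the bucket empty would be turned away, for example with an HTTP 429 response. The injectable `clock` is there only to make the behaviour testable.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so an initial burst is accepted
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to the time elapsed, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should reject, e.g. with HTTP 429 Too Many Requests
```

For example, `TokenBucket(rate=2, capacity=3)` accepts three requests immediately, rejects a fourth, and accepts one more half a second later.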

A data quality firewall to increase data quality

Dirty data can derail digital transformations.

A data quality firewall ensures that any data flowing between systems and being used by team members is accurate and trusted.

If teams cannot trust the quality of the data they are working with, they will either:

- Not use the data and potentially miss valuable business opportunities

- Waste an awful lot of time reconciling data and building various checkpoints to satisfy trust requirements

A data quality firewall aims to assure the quality of data at the earliest possible moment. Any data that is allowed to flow through the integration platform should be considered accurate and trustworthy so that validation and reconciliation logic does not end up being dispersed across the entire IT landscape, which quickly becomes unmanageable.
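A minimal sketch of this idea in Python: each record is checked against a list of rules, and failures are quarantined with their reasons rather than passed on. The three rules and the field names are hypothetical examples; a real firewall would load its rules from the data standards agreed for each integration.

```python
import re
from typing import Callable, Optional

# A rule returns an error message, or None if the record passes.
Rule = Callable[[dict], Optional[str]]

EMAIL = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

RULES: list[Rule] = [
    lambda r: None if r.get("customer_id") else "customer_id is required",
    lambda r: None if EMAIL.fullmatch(r.get("email") or "") else "email is not valid",
    lambda r: None
    if re.fullmatch(r"[A-Z]{2}", r.get("country") or "")
    else "country must be a two-letter code",
]


def firewall(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split records into those allowed through and those quarantined with reasons.

    Quarantined records are held for remediation instead of flowing on to
    downstream systems, so validation lives in one place.
    """
    accepted: list[dict] = []
    quarantined: list[tuple[dict, list[str]]] = []
    for record in records:
        errors = [e for e in (rule(record) for rule in RULES) if e]
        if errors:
            quarantined.append((record, errors))
        else:
            accepted.append(record)
    return accepted, quarantined
```

Keeping each rejected record together with every reason it failed is what makes remediation practical: someone can fix the source data and resubmit it.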

A matching engine

It is common for some of an organisation's data assets to overlap two or more systems. This can happen when the parties an organisation interacts with take on multiple roles. To demonstrate this, let us imagine a fictional theme park operator with theme parks across the country.

The operator generates revenue in the following ways:

- Selling day tickets at specific theme parks

- Selling annual passes that grant entry across all parks over a whole year

- Selling merchandise via onsite gift shops

- Selling food and drink onsite

- Selling park tickets and merchandise via an online ecommerce shop

- Selling kids' party packages

Now let us imagine a person, Ali, who loves theme parks. Ali has had the following interactions:

- Monday to Friday, Ali works at the local theme park

- In February Ali purchases day tickets online for Theme Park A

- Ali buys some lunch at Theme Park A

- In May Ali purchases day tickets online for Theme Park B

- Ali buys some lunch at Theme Park B

- After enjoying both days out, before leaving Theme Park B Ali visits membership services to upgrade to an annual pass

- Ali takes her family to the gift shop to buy some sweets, a teddy bear and a pencil

- A few months later, Ali phones Theme Park A to book a kids' party package

Ali booked tickets for Theme Park A on a mobile phone, which autocompleted the name fields as Ali Smith.

Ali booked tickets for Theme Park B on a laptop, which autocompleted the name fields as A Smith.

By the time Ali visited Theme Park B and upgraded to an annual pass, the family had moved house, so the address given was different from the one on the online booking.

Ali switched to a new email address just before booking the kids' party because the old ISP went out of business.

Without a matching engine, it is very easy for these to look like unrelated transactions that create unrelated records in the following types of system:

- Employee

- Visitor

- Member

- Customer

That creates some problems:

- If Ali needs to update personal details, the update will probably only be made for a single persona, the one prompting the update. The process will then likely need to be repeated in future when Ali interacts as a different persona.

- It isn't possible to recognise just how valuable Ali is to the company because the transactional data is fragmented and therefore the relationship is not nurtured appropriately.

With a matching engine those non-identical records from different systems can be connected in order to fully understand the relationships and interactions made with an organisation.
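The sketch below shows one simple, rule-based way such an engine could link Ali's records, written in Python. It assumes two records match when their names reduce to the same first initial and surname and at least one strong identifier (email, phone or address) agrees, and it then groups matches transitively with union-find. The field names and rules are illustrative; production matching engines typically add fuzzy or probabilistic scoring and human review for borderline cases.

```python
from itertools import combinations

STRONG_IDENTIFIERS = ("email", "phone", "address")


def _norm(value) -> str:
    """Lower-case and collapse whitespace so trivial formatting differences are ignored."""
    return " ".join(str(value).lower().split()) if value else ""


def name_key(name: str) -> tuple[str, str]:
    """Reduce a name to (first initial, surname): 'Ali Smith' and 'A Smith' both give ('a', 'smith')."""
    parts = name.replace(".", " ").split()
    return (parts[0][0].lower(), parts[-1].lower()) if parts else ("", "")


def is_match(a: dict, b: dict) -> bool:
    """Names must be compatible AND at least one strong identifier must agree."""
    if name_key(a["name"]) != name_key(b["name"]):
        return False
    return any(_norm(a.get(k)) and _norm(a.get(k)) == _norm(b.get(k)) for k in STRONG_IDENTIFIERS)


def cluster(records: list[dict]) -> list[set[str]]:
    """Group record ids into real-world entities using union-find over pairwise matches.

    Matches are transitive: if A matches B and B matches C, all three are grouped,
    even when A and C share no identifier (e.g. an old email versus a new one).
    """
    parent = {r["id"]: r["id"] for r in records}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps the trees shallow
            x = parent[x]
        return x

    for a, b in combinations(records, 2):
        if is_match(a, b):
            parent[find(a["id"])] = find(b["id"])

    groups: dict[str, set[str]] = {}
    for r in records:
        groups.setdefault(find(r["id"]), set()).add(r["id"])
    return list(groups.values())


if __name__ == "__main__":
    records = [  # hypothetical records loosely following Ali's story
        {"id": "ticket_a", "name": "Ali Smith", "email": "ali@old-isp.example", "address": "1 Old Road"},
        {"id": "ticket_b", "name": "A Smith", "email": "ali@old-isp.example", "address": "1 Old Road"},
        {"id": "member", "name": "Ali Smith", "email": "ali@old-isp.example", "address": "9 New Street"},
        {"id": "party", "name": "Ali Smith", "email": "ali@new-isp.example", "address": "9 New Street"},
        {"id": "family", "name": "Ben Smith", "address": "1 Old Road"},
    ]
    # Ali's four records form one group; Ben shares an address but not an initial.
    print(cluster(records))
```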

A transparent data transit tool backed by rich telemetry

As you connect previously siloed systems, you will transport more and more data across networks and through interfaces, so it is vital that your integration platform has sufficient metrics and management tooling to make it operationally viable.

In a typical integration, data is produced, validated, transformed, enriched and consumed. Something can fail at each step, whether due to data errors, infrastructure instability or system downtime, so your integration platform must not only help you find faults but also help you spot potential issues before they become problems.

An integration platform should provide the following monitoring for efficient ongoing operations:

- Request rates

- Response times

- Failure rates

- Exceptions

- Performance counters

- Trace logs

- Custom events & metrics

The data must be presented in a way that makes it easy to analyse, cross-reference and pinpoint faults.
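At its simplest, capturing some of these measurements means timing every call and counting failures per integration. The Python below is an in-process sketch under that assumption; a real platform would export such data to a monitoring tool, and the `Telemetry` class and integration names are hypothetical.

```python
import statistics
import time
from collections import defaultdict
from contextlib import contextmanager
from typing import Iterator


class Telemetry:
    """Record request counts, failures and response times per integration."""

    def __init__(self) -> None:
        self.durations: dict[str, list[float]] = defaultdict(list)  # seconds per call
        self.failures: dict[str, int] = defaultdict(int)

    @contextmanager
    def track(self, integration: str) -> Iterator[None]:
        start = time.perf_counter()
        try:
            yield
        except Exception:
            self.failures[integration] += 1  # count the failure, then let it propagate
            raise
        finally:
            self.durations[integration].append(time.perf_counter() - start)

    def summary(self, integration: str) -> dict:
        calls = self.durations[integration]
        return {
            "requests": len(calls),
            "failure_rate": self.failures[integration] / len(calls) if calls else 0.0,
            "median_seconds": statistics.median(calls) if calls else 0.0,
        }
```

Wrapping each step of a flow in `with telemetry.track("crm_sync"):` gives request counts, failure rates and response times per integration without changing the step itself.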

Why Does Your Company Need An Integration Platform to Drive Business Transformation?

Eliminate point to point integrations

When a company first realises there is value in programmatically synchronising some data between two systems it is common for a project to be initiated along the lines of: "Synchronise customer data changes in System A to System B".

Time and money are typical motivating factors in IT projects, so the chosen solution is often one that can be delivered swiftly, which might be summarised as follows:

Write a custom module for System A that will detect changes to the customer record. For each changed customer call the SOAP API of System B to synchronise the updated customer data into System B.

While this solves the problem swiftly it does have several deficiencies:

- It isn't fault tolerant. What happens if System B is temporarily unreachable when the customer data is updated in System A? Of course, you could write code to achieve fault tolerance, but then you must duplicate that functionality in every point to point integration you build over time.

- The systems are tightly coupled. What happens if System B is upgraded and its SOAP API is dropped in favour of REST?

- There isn't much scope for code reuse. If System C comes along expecting similar data but in CSV format, we need to start from scratch.

- Reference data is duplicated and unmanaged. Different systems often use slightly different values for things like status fields. If these values are duplicated into the target system for mapping purposes, they are likely to become out of date quickly.

Over time, an organisation that relies on more and more point to point integrations will find it increasingly difficult to manage, maintain and upgrade its integrated systems.
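The alternative is to route every integration through a hub. The Python below is a deliberately simplified sketch of a publish/subscribe hub, assuming a canonical `customer.updated` event; the System B and System C adapters are hypothetical stand-ins for a real API call and a real CSV file.

```python
import csv
import io
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class IntegrationHub:
    """Publishers and subscribers know only the hub and a canonical event, never each other."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> None:
        # A production platform would persist the event and retry failed
        # deliveries; this sketch calls each subscriber directly.
        for handler in self._subscribers[topic]:
            handler(event)


# Hypothetical adapters: only these change when a target system changes.
system_b_requests: list[dict] = []  # stand-in for calls to System B's API
system_c_file = io.StringIO()  # stand-in for the CSV file System C imports


def to_system_b(event: dict) -> None:
    # If System B drops SOAP for REST, only this mapping needs rewriting.
    system_b_requests.append({"CustomerRef": event["id"], "FullName": event["name"]})


def to_system_c(event: dict) -> None:
    csv.writer(system_c_file).writerow([event["id"], event["name"]])
```

System A publishes each change once, with `hub.publish("customer.updated", event)`. Supporting another system means subscribing one more adapter, and a change to System B's API touches only `to_system_b`. Fault tolerance would come from a durable message broker underneath, which this sketch omits.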

Reduce data quality problems

If bad data is entering even a single system in your company, a customer-facing portal for example, it is damaging your business. It wastes the team's time, either working around problems or remediating them, and it makes the team less willing to base decisions on data, which leads to missed opportunities.

When data is synchronised across multiple applications, the problem is multiplied: bad data is no longer polluting a single system but several, hindering the members of every team that uses those systems rather than just one. Your business waste is multiplied too.

An integration platform featuring a data quality firewall will demand and enforce high-quality data as a minimum requirement for any system being integrated. It will be equipped with robust processes for rejecting bad data that does not meet expected standards and for supporting its remediation.

Your company benefits because your team is not wrestling with rubbish data across the organisation, you don't risk brand damage by contacting customers with inappropriate data and you are better able to compete in your market by trusting your data enough to draw valuable and actionable insights.

Furthermore, a high quality data set allows you to transform your customer relationships. By linking together your customer's footprints across all of your different systems and establishing your master data you can understand the true value of every customer relationship over their lifetime, even if they move house, alternate between using initials and names or exhibit other subtle differences in the data they provide you with each interaction.

The key is being able to identify customers who look a little different in each system but are actually the same person.

Once we have connected these data footprints we can accomplish two key tasks that are essential to customer centricity, which is a driving force behind some of the most successful companies today:

- 360 degree view of the customer

- Lifetime value of the customer

With this level of insight, your customer services team can personalise their communications and even tailor the level of service to the value of the customer.
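Once records have been matched, a simple historical lifetime value is an aggregation: sum every transaction under the customer it belongs to. The Python below sketches this, assuming the matching engine supplies a record-to-customer mapping (`entity_of`) and that amounts are held in pence to avoid floating-point rounding. It is a historical total, not a predictive lifetime value model.

```python
from collections import defaultdict


def lifetime_value(transactions: list[dict], entity_of: dict[str, str]) -> dict[str, int]:
    """Total historical spend per real-world customer, in pence.

    `entity_of` maps a source record id to the customer id assigned by a
    matching engine; records the engine did not match count as their own customer.
    """
    totals: dict[str, int] = defaultdict(int)
    for tx in transactions:
        customer = entity_of.get(tx["record_id"], tx["record_id"])
        totals[customer] += tx["amount_pence"]
    return dict(totals)
```

Without the matching step, each of Ali's records would appear as a separate, smaller customer; with it, their spend adds up under one identity.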

Make data accessible across the organisation

By making your data from systems across your IT landscape available in a consistent way you will promote innovation. Your development team can focus on building value if they no longer have to negotiate access to several systems, each using different protocols, administered by different people and outputting data in different formats.

Not only does innovation increase, but project costs decrease, because the complexity of working with multiple, different systems has been abstracted away from individual projects. Altogether, this means you build more value, more quickly, for less money.

It isn't only developers who can benefit from an integration platform though. Perhaps you have exposed a connected dataset via your integration platform to power your new mobile app. That dataset and the API endpoints that expose it are reusable assets. Why not allow your marketing team to use the same endpoint to fetch data that they can use as an input to their marketing campaigns?

Your integration platform opens the door for data citizens to fetch the data they need, when they need it. This can eliminate the need to submit requests to a central reporting function. Again, your business benefits from reduced waste and your team avoids the frustration of waiting for requests to be fulfilled.

When your team is used to fetching the data they need when they need it, the natural progression is to strive for data driven decision making. We are not only talking about decisions based on historical data fed into forecasting models, though that is of course possible. We can also take real-time streams of data, whether from omnichannel customer touchpoints, IoT devices, infrastructure telemetry or live market data, and base business decisions, and even customer interactions, on what is happening right now.

Create business agility with re-usable APIs

With IT landscapes at many companies changing almost constantly, and the pace of that change accelerating, your ability to move quickly can be a key determinant of your success.

Maximising the scope for reuse of integration building blocks is one way to achieve real business agility, allowing you to integrate new systems at minimal cost, which is key to keeping your business at the forefront of technological change.

Taking the fictional theme park example from earlier, once the operator has created the API endpoints that allow membership registrations to flow from the onsite customer services application into the CRM, it is an easy ask to let memberships taken over the phone or via ecommerce purchases flow into the same CRM through the same channel.

Legacy applications not designed to support modern workloads

Your legacy applications might be excellent at performing their intended task, whether that be recording financial ledgers, managing warehouses or optimising customer relationships. However, these systems are sometimes from an age where they were expected to run in isolation, perhaps receiving input data on a fixed schedule at a certain volume delivered by a particular member of the team.

Those systems are unlikely to have been architected to support the type of workload that can be generated by exposing a user interface to the public either as a web site, mobile application or any other kind of customer touchpoint.

Imagine a bank that is used to opening accounts face to face, filling in paper forms and then manually loading that data into the system as a back office task. The bank decides the time is right to undertake a digital transformation project, so it commissions an agency to build a website with an online form whose submissions are routed directly to the back office system in real time. The leadership team are convinced this is the way forward, so they sign off a huge marketing budget and fund a generous cash giveaway for new customers registering online.

The campaign goes live and the team celebrates as they see the first accounts being opened.

As word spreads across social media about this great opportunity the rate of account openings increases.

Then the problems start to bite. Customer services begin receiving emails and phone calls saying the website is showing error pages, and before long the back office system crashes.

The back office system was not designed to handle that volume of concurrent connections and it couldn't cope.

By routing the registrations through an integration platform, the bank can use a tried and tested set of tools to control the rate at which requests reach its systems. If one system produces requests faster than another can handle them, the integration platform can queue those requests and deliver them at a manageable rate.
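The scheduling rule behind this queue-based load levelling can be sketched in a few lines of Python. The function below simulates it, assuming requests arrive at known times and the back office can safely take `max_per_second` requests; it returns when each queued request would be delivered. It illustrates the idea rather than implementing a production queue.

```python
def level_load(arrivals: list[float], max_per_second: float) -> list[float]:
    """Return the time at which each queued request is delivered downstream.

    Requests arrive at the given times (seconds, in ascending order) and wait
    in a first-in, first-out queue. The platform releases at most one request
    every 1 / max_per_second seconds, turning a burst into a steady stream.
    """
    if max_per_second <= 0:
        raise ValueError("max_per_second must be positive")
    interval = 1.0 / max_per_second
    deliveries: list[float] = []
    next_slot = float("-inf")  # earliest time the back office can take another request
    for arrived in arrivals:
        send_at = max(arrived, next_slot)  # wait in the queue if the slot is not free yet
        deliveries.append(send_at)
        next_slot = send_at + interval
    return deliveries


if __name__ == "__main__":
    burst = [0.0] * 5 + [10.0]  # five sign-ups at once, then a quiet spell
    print(level_load(burst, max_per_second=2))  # [0.0, 0.5, 1.0, 1.5, 2.0, 10.0]
```

The burst is absorbed by the queue and spread out over two seconds, while requests arriving during quiet periods pass straight through without delay.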

An integration platform not only allows you to connect your systems together but it allows you to do it safely and robustly.

Process automation increases efficiency and reduces data errors

Time and cost saving is often cited as the reason for automating processes. However, increased accuracy is often a bigger benefit to your business.

This is because poor accuracy can result in reputational damage for your business, or even fines for breaching legislation such as the Data Protection Act. If you send a customer somebody else's data, or simply erroneous data, your competence will be questioned and your brand will be damaged.

Unfortunately, the problem doesn't stop there. If such an error occurs, or even if the possibility exists, your team will spend time building in manual checks and balances to try to catch errors before anyone notices them. So accuracy problems create further time and cost problems too.

It is an undeniable truth that humans make mistakes when working with data. Maybe they are working too fast in a spreadsheet, maybe they are tired, maybe the Ctrl key on their keyboard is sticky with fruit juice so that a simple Ctrl+C, Ctrl+V didn't behave as expected and the error went unnoticed, or maybe they chose the wrong "Graham" when the email client suggested a recipient from the address book.

Automating such tasks via an integration platform, with a reliable set of inputs, means we can consistently produce an accurate and timely set of outputs.

De-risk application procurement/retirement

When you procure a new system, you face a decision: do you phase it in gradually or go for a big-bang cutover? Sometimes an integration platform can de-risk this process by supporting the parallel running of old and new applications.

This can allow some functions to move to the new system early, realising value sooner, while a gradual rollout keeps the organisational change manageable. It can also give you a backup plan: you can revert to the old system if things do not go to plan on the new one.
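A minimal sketch of how a platform might support parallel running, in Python: a router sends every request to the legacy system so it stays current and, for functions that have been migrated, returns the new system's response instead. Rolling back is then a matter of routing that function to the legacy system again. The class, method and function names are hypothetical.

```python
from typing import Callable

Handler = Callable[[dict], str]


class ParallelRunRouter:
    """Route each business function to the old or new system during a phased migration.

    Every request is also sent to the legacy system so it stays current,
    which keeps a rollback to the old system available at any point.
    """

    def __init__(self, legacy: Handler, replacement: Handler):
        self.legacy = legacy
        self.replacement = replacement
        self.migrated: set[str] = set()

    def migrate(self, function: str) -> None:
        self.migrated.add(function)

    def roll_back(self, function: str) -> None:
        self.migrated.discard(function)

    def handle(self, function: str, request: dict) -> str:
        legacy_result = self.legacy(request)  # the legacy system always receives the request
        if function in self.migrated:
            return self.replacement(request)
        return legacy_result
```

Because the old system never stops receiving data during the parallel run, reverting a function is a routing change rather than a data migration.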

The easier and less risky it is for your company to procure new systems, the more inclined you will be to adopt new technologies and stay ahead of your competition.

Summary

The most successful businesses today treat data as a highly valuable asset. They uncover relationships across their various datasets, they let data inform their decision making and they use digital techniques to automate processes to maximise operational efficiency.

IT landscapes are becoming increasingly complex and looking at system integrations as isolated projects will tend to result in a tangled web of non-standard integrations that are difficult to monitor and maintain.

An integration platform brings structure to your system integrations in order to leverage your data, promote innovation and boost business agility.

What Next?

Learn more about how our Systems Integration Consulting Service can help your business to liberate your data, optimise efficiency, automate processes and drive sales. If you're ready to talk, get in touch today for a no-strings chat.